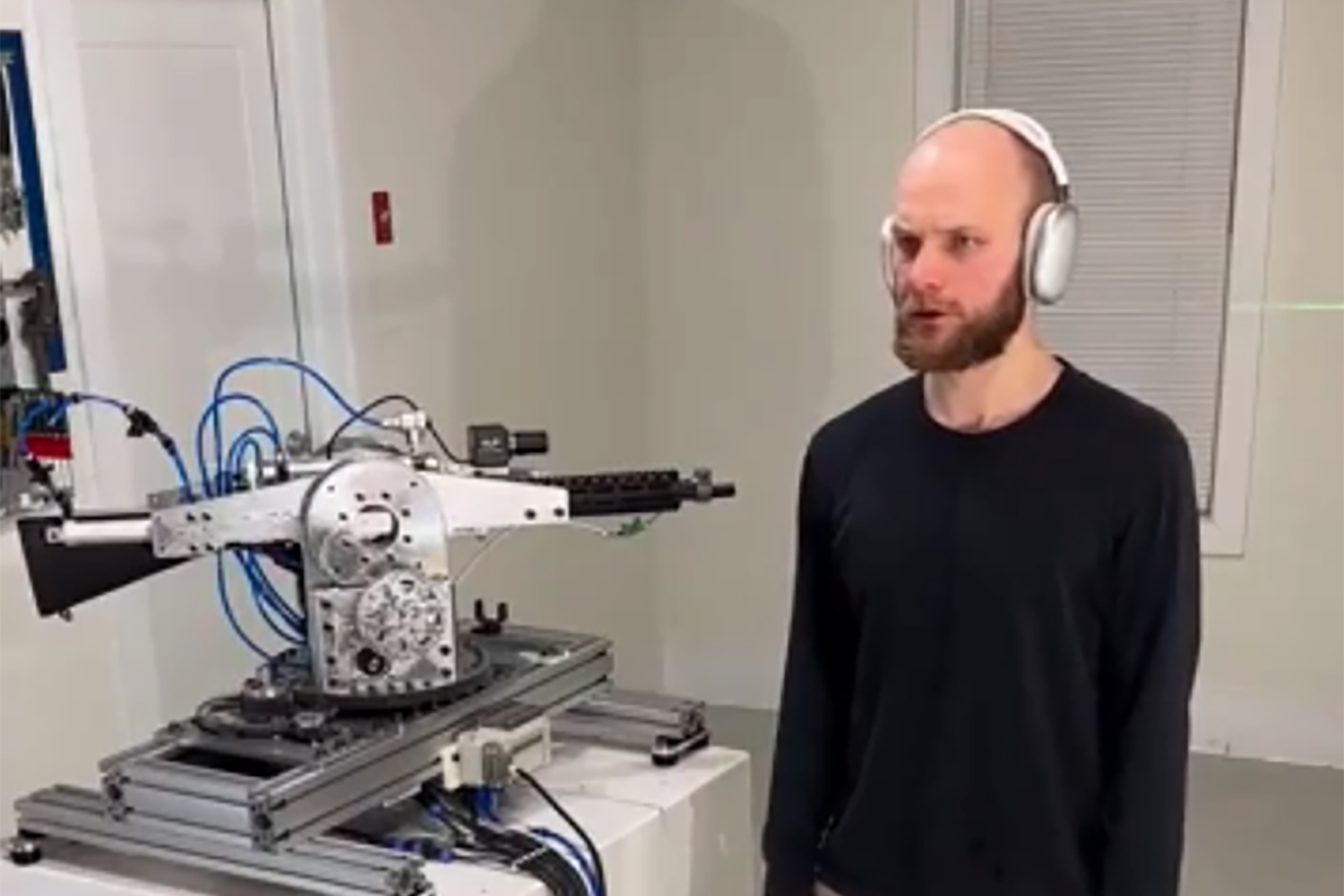

OpenAI has cut off a programmer who built a device that could respond to ChatGPT queries to aim and fire an automated firearm. The device went viral after a Reddit video showed its creator speaking firing commands aloud, after which a firearm beside him quickly began aiming and firing at nearby barriers.

“ChatGPT, we’re facing an assault from the front left and front right,” the programmer told the system in the footage. “Act accordingly.” The speed and accuracy with which the firearm responds are remarkable. The setup relies on OpenAI’s Realtime API to interpret spoken input and return instructions the mechanism can understand. It would take only simple training for ChatGPT to receive a command like “turn left” and translate it into a machine-readable instruction.

In a statement to Futurism, OpenAI said it had viewed the video and cut off the developer behind it. “We actively detected this infraction of our policies and informed the developer to stop this endeavor prior to getting your question,” the company told the outlet. Perhaps OpenAI CEO Sam Altman was right that AI could destroy humanity. Or maybe safeguards are needed, similar to the way photocopiers refuse to copy banknotes.

Reddit video: “OpenAI realtime API linked to a gun,” posted by u/MetaKnowing in r/Damnthatsinteresting

The potential to automate lethal weapons is one of the fears critics have raised about AI technology like OpenAI’s. The company’s multi-modal models can interpret audio and visual input to understand a person’s surroundings and answer questions about what they are seeing. Autonomous drones are already being developed that could be used on the battlefield to identify and strike targets without human input. That is, undoubtedly, a war crime, and it risks making humans complacent, letting an AI make the decisions and making it hard to hold anyone accountable.

The concern does not appear to be purely hypothetical, either. A recent Washington Post report found that Israel has already used AI to select bombing targets, sometimes indiscriminately. “Soldiers insufficiently trained in applying the technology attacked human targets without verifying Lavender’s predictions at all,” the story says, referring to an AI application. “Occasionally the only verification needed was that the target was male.”

Proponents of AI on the battlefield argue it will make soldiers safer by letting them stay away from the frontlines while neutralizing targets, like missile depots, or conducting reconnaissance from a distance. AI-powered drones could also strike with precision, though that depends on how they are used. Critics say the U.S. should instead get better at disrupting enemy communication networks, so that adversaries like Russia have a harder time deploying their own drones or nuclear weapons.

OpenAI prohibits the use of its tools to design or deploy weapons, or to “automate certain systems affecting personal safety.” However, the company last year announced a partnership with defense-tech company Anduril, a maker of AI-driven drones and missiles, to build systems that can defend against drone attacks. The company says the system will “swiftly compile time-sensitive intelligence, lessen the burden on human operators, and enhance situational awareness.”

It’s not hard to see why tech companies are eager to move into warfare. The U.S. spends nearly a trillion dollars a year on defense, and cutting that budget remains an unpopular idea in Congress. With President-elect Trump bringing conservative-leaning tech figures like Elon Musk and David Sacks into his administration, a new crop of defense tech companies is expected to benefit greatly and possibly displace established defense giants like Lockheed Martin.

Although OpenAI is blocking its customers from using its AI to build weapons, there are numerous open-source models that could be used for the same purpose. Add to that the ability to 3D print weapon components, which authorities believe alleged UnitedHealthcare shooter Luigi Mangione did, and it is becoming alarmingly easy to build DIY autonomous killing machines from the comfort of one’s home.