Investigators at Stanford University compensated 1,052 individuals $60 for reading the opening two lines of The Great Gatsby into an application. Afterward, an AI that resembled a 2D character from a vintage Final Fantasy game on SNES requested participants to narrate their life stories. The scholars utilized those interviews to develop an AI they claim mimics the participants’ behavior with 85% precision.

The research, named Generative Agent Simulations of 1,000 People, signifies a collaborative effort between Stanford and researchers associated with Google’s DeepMind AI investigation unit. The proposition is that forming AI agents inspired by random individuals could aid policymakers and business leaders in comprehending the populace better. Why depend on focus groups or survey the public when you can engage with them momentarily, generate an LLM from that dialogue, and retain their reflections and viewpoints indefinitely? Or, almost as accurately as the LLM can reconstruct those reflections and sentiments.

“This study lays the groundwork for innovative tools capable of probing individual and collective actions,” the research abstract noted.

“How might, for example, a varied group of people respond to fresh public health strategies and communications, react to product launches, or deal with major disruptions?” the study continued. “When simulated persons merge into groups, these simulations could assist in trial interventions, forging complex theories capturing subtle causal and contextual interactions, and broaden our perception of entities like institutions and networks across domains like economics, sociology, organizations, and political science.”

All these opportunities are based on a two-hour discussion provided to an LLM, which mostly addressed questions akin to their actual-life counterparts.

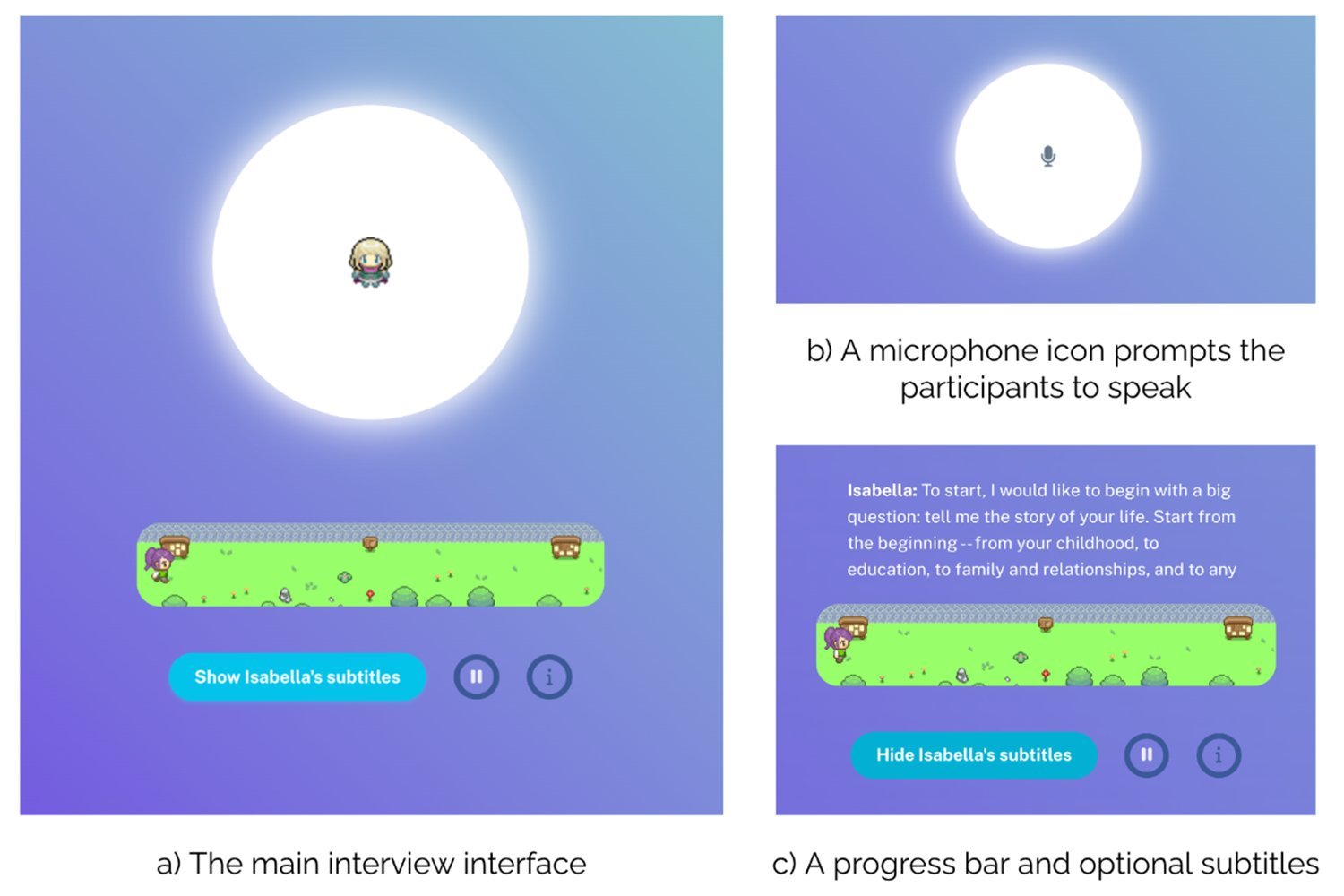

A large part of the procedure was automated. The university engaged Bovitz, a market research organization, to recruit participants. The objective was to secure a comprehensive slice of the U.S. populace, as extensive as feasible within the constraint of 1,000 individuals. To execute the study, users set up an account on a specially created interface, fashioned a 2D sprite character, and initiated communication with an AI interviewer.

The manner and questions of the interview are a revised form of those used by the American Voices Project, a combined endeavor between Stanford and Princeton University focused on interviewing individuals across the nation.

Each session commenced with participants reciting the first two sentences of The Great Gatsby (“In my younger and more vulnerable years, my father gave me advice I’ve pondered since. ‘Whenever you feel like criticizing anyone,’ he advised, ‘just bear in mind that all the people in this world haven’t had the advantages that you’ve had.’”) to adjust the audio quality.

The research protocol stated, “The interview interface illustrated the 2-D sprite avatar representing the interviewer agent centrally, with the participant’s avatar depicted at the bottom, advancing towards a milestone to symbolize progress. When the AI interviewer agent communicated, a rhythmic animation of the central circle with the interviewer avatar signaled it.”

The average two-hour interviews resulted in transcripts measuring 6,491 words. It inquired about race, gender, politics, income, social media engagement, occupational stress, and family structure. The researchers have shared the script and the questions employed by the AI.

These sub-10,000 word transcripts were subsequently introduced into another LLM by the researchers to generate agents intended to mirror the participants. Both the actual participants and AI replicas were afterward subjected to additional queries and economic tests to evaluate their behaviors. The study mentioned, “When an agent is queried, the entire interview transcript is embedded in the model prompt, guiding the model to emulate the person based on their interview data.”

This aspect of the procedure was kept as controlled as possible. Scholars applied the General Social Survey (GSS) and the Big Five Personality Inventory (BFI) to ascertain how closely the LLMs mirrored their origin. They further guided both participants and the LLMs through five economic simulations to see how they performed.

Outcomes varied. The AI agents answered about 85% of the queries the same way as the real participants on the GSS. They reached 80% on the BFI. However, the figures fell when the agents engaged in economic ventures. Researchers incentivized the real participants with monetary rewards for playing challenges like the Prisoner’s Dilemma and The Dictator’s Game.

In the Prisoner’s Dilemma, participants have the option to collaborate to mutually benefit or betray their partner for a greater reward. In The Dictator’s Game, they decide how to distribute resources to others. The live participants earned additional money on top of the initial $60 for engaging in these.

When confronted with these economic tasks, the AI replicas didn’t replicate their human counterparts as well. “On average, the generative agents accomplished a normalized correlation of 0.66,” or roughly 60%.

The complete document is worth exploring if you are intrigued by academic perspectives on AI agents and public opinion. Scholars efficiently condensed a person’s character into an LLM behaving similarly in a brief span. With adequate resources, they might significantly close the gap between the two.

This, however, is concerning. Not due to an aversion to reducing the human essence to data, but out of concern that this technology might be misused. We have observed less sophisticated LLMs exploiting public records to deceive elders into disclosing bank details by posing as AI familial contacts over quick calls. What is the implication when these systems are provided a script? What ensues when they gain access to crafted personas drawn from social media patterns and other public data?

What transpires when corporations or politicians determine public desires and necessities, not from direct input, but from an estimation of such?