Nvidia captured the spotlight at CES 2025 with the unveiling of the RTX 5090, and although there’s been a lot of talk about the card’s $2,000 price, it introduces a wealth of new technology. Chief among it is DLSS 4, which brings multi-frame generation to Nvidia’s GPUs, promising a 4X performance boost in more than 75 titles as soon as Nvidia’s new RTX 50-series GPUs become available.

There’s been a lot of confusion about how DLSS 4 actually works, though. Between misleading statements from Nvidia’s CEO and a major overhaul of how DLSS operates, it’s no surprise that misinformation is circulating about the new technology, what it can do, and, critically, its limitations.

Let’s set the record straight, at least as far as we can before Nvidia’s new graphics cards arrive and we all get to see what DLSS 4 offers firsthand.


No, it doesn’t ‘predict the future’

A major source of confusion about how DLSS 4 works comes from a remark Nvidia CEO Jensen Huang made during a Q&A. Jarred Walton of Tom’s Hardware asked about the technical details of DLSS 4, and Huang flatly denied that DLSS 4 uses frame interpolation. Huang said that DLSS 4 “predicts the future,” rather than “interpolating the past.” It’s a catchy line, no doubt. Unfortunately, it’s inaccurate.

Huang has waxed poetic about DLSS Frame Generation before, and while this kind of rhetoric works for explaining a technology like DLSS 4 to a broad audience, it also breeds misconceptions about how it actually works. After that statement, I received several messages from readers telling me that I was misunderstanding how DLSS 4 works. As it turns out, I’m not, but I understand why there’s so much confusion.

DLSS 4’s multi-frame generation uses a technique called frame interpolation. It’s the same technique used in DLSS 3, and the same one you’ll find in other frame generation tools like Lossless Scaling and AMD’s FSR 3. Frame interpolation works like this: Your GPU renders two frames, and then an algorithm steps in to calculate the difference between them. It then “generates” an in-between frame based on the difference between the two rendered frames.
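To make the idea concrete, here is a minimal sketch of interpolation in Python. It is not how DLSS works internally: it assumes a frame is just a flat list of pixel brightness values and uses a naive linear blend, where DLSS relies on AI models. The function name `interpolate_frames` and its parameters are hypothetical, chosen only for illustration.

```python
def interpolate_frames(frame_a, frame_b, generated_count):
    """Return `generated_count` in-between frames for two rendered frames.

    Assumption: a frame is a flat list of pixel brightness values, and the
    in-between frames are a plain linear blend. This is a toy stand-in for
    the idea of interpolation, not the DLSS algorithm.
    """
    if generated_count < 1:
        raise ValueError("generated_count must be at least 1")
    if len(frame_a) != len(frame_b):
        raise ValueError("both frames must have the same number of pixels")

    generated = []
    for i in range(1, generated_count + 1):
        # Position of this generated frame between the two rendered frames (0..1).
        t = i / (generated_count + 1)
        generated.append([(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)])
    return generated


# One generated frame (DLSS 3 style) versus three (DLSS 4 style).
first_rendered = [0.0, 100.0]
second_rendered = [100.0, 0.0]
single = interpolate_frames(first_rendered, second_rendered, 1)
triple = interpolate_frames(first_rendered, second_rendered, 3)
```

Note that both calls need the same two rendered frames as input. Asking for three in-between frames instead of one changes how many frames come out, not how many rendered frames have to exist first.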

And DLSS 4 relies on frame interpolation. There has been early research into new ways to generate frames, notably work from Intel on frame extrapolation, but it’s still early days for that technology. There are some details I can’t share yet, but I’ve confirmed with several sources that DLSS 4 does, in fact, use frame interpolation. It makes sense, too. Rendering techniques like these don’t appear out of thin air; there’s typically a trail of academic research before any new rendering technique becomes a marketable product like DLSS 4.

That doesn’t diminish what DLSS 4 can do. It may generate new frames with the same technique as DLSS 3, but that shouldn’t distract you from what DLSS 4 is actually capable of.

Latency isn’t the issue you think it is

It’s not hard to see why Nvidia doesn’t want to dwell on DLSS 4’s use of frame interpolation: frame interpolation introduces latency. Two frames have to be rendered, and the interpolation performed, before the first frame in the sequence can be displayed, so any frame interpolation tool effectively plays on a slight delay. The common assumption is that latency rises linearly with each extra generated frame, and that’s a misconception.

The Verge wrote that it wants to “observe the impact of the new frame generation technology on latency,” while TechSpot noted that “users worry multi-frame rendering might exacerbate the [latency] issue.” These concerns are a natural reaction to the larger number of “synthetic” frames DLSS 4 produces. If generating one frame causes a latency problem, surely generating three makes it worse. That’s not how it works, however.

This is why it’s so important to understand that DLSS 4 uses frame interpolation. The idea of playing on a delay is no different between DLSS 3 generating one extra frame and DLSS 4 generating three; the process still involves rendering two frames and comparing the difference between them. Your latency doesn’t rise significantly whether one, two, or three extra frames are inserted between the two rendered frames. Regardless of how many frames are interpolated, the latency added by the process stays roughly the same.

Here’s an example. Say you’re playing a game at 60 frames per second (fps). That means there’s a gap of about 16.7 milliseconds between each frame you see. With DLSS 3, the frame rate climbs to 120 fps, but the latency doesn’t drop to 8.3ms. The game looks smoother, but the gap between each rendered frame is still 16.7ms. With DLSS 4, you could jump to 240 fps, quadrupling the frame rate, but again the latency doesn’t drop to 4.2ms. It stays at 16.7ms.
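The arithmetic in that example can be sketched in a few lines of Python. This is the same simplified model, assuming frame generation multiplies the displayed frame rate cleanly and ignoring overhead, display latency, and input latency. The function names are hypothetical.

```python
def frame_time_ms(fps):
    """Milliseconds between consecutive frames at a given frame rate."""
    return 1000.0 / fps


def frame_generation_summary(base_fps, generated_per_rendered):
    """Compare displayed and rendered frame intervals under frame generation.

    Assumption: every rendered frame is followed by `generated_per_rendered`
    interpolated frames, and generating them costs nothing.
    """
    displayed_fps = base_fps * (generated_per_rendered + 1)
    return {
        "displayed_fps": displayed_fps,
        "displayed_interval_ms": frame_time_ms(displayed_fps),
        # The game still renders at the base rate, so this value never changes.
        "rendered_interval_ms": frame_time_ms(base_fps),
    }


# No frame generation, one generated frame (DLSS 3), three generated frames (DLSS 4).
for generated in (0, 1, 3):
    summary = frame_generation_summary(60, generated)
    print(
        f"{summary['displayed_fps']} fps displayed: "
        f"{summary['displayed_interval_ms']:.1f}ms between displayed frames, "
        f"{summary['rendered_interval_ms']:.1f}ms between rendered frames"
    )
```

The displayed interval shrinks as more frames are generated, while the rendered interval stays fixed by the base frame rate, which is the value that governs how responsive the game feels in this model.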

This is a simplified view of PC latency (there’s overhead from running DLSS Frame Generation, plus latency from your display and input device), but it helps explain why base latency doesn’t rise linearly when more frames are added to the interpolation process. The time between each rendered frame doesn’t change. The latency you experience is mostly a product of your base frame rate before DLSS Frame Generation, plus the overhead of the tool itself.

You don’t have to take my word for it. Digital Foundry has tested DLSS 4, including latency, and found results consistent with what I’ve described. As Richard Leadbetter of Digital Foundry put it, “The primary increment in latency appears primarily due to buffering an additional frame, yet augmenting more intermediate frames results in a minor latency escalation.” The small additional latency comes from DLSS calculating more in-between frames between the two rendered frames, so the core latency picture with DLSS 4 isn’t dramatically different from DLSS 3.

The latency story with DLSS 4 is largely the same as with DLSS 3. If you’re playing at a low base frame rate, there’s a disconnect between how responsive the game feels and how smooth it looks. That disconnect becomes more noticeable with DLSS 4, but it doesn’t mean a large increase in latency as a result. That’s why Nvidia’s impressive new Reflex 2 isn’t required for DLSS 4; as with DLSS 3, developers only need to implement the first version of Reflex for DLSS 4 to work.

An entirely revised model

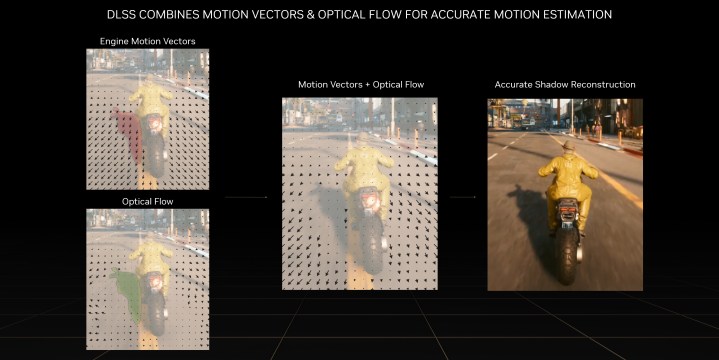

Explaining how DLSS 4 works in simple terms might give the impression that it’s more of the same, but that’s not the case. DLSS 4 is a big departure from DLSS 3 because it uses a completely new AI model. Or, more accurately, several AI models. As Nvidia explains, DLSS 4 runs five separate AI models for each rendered frame when using Super Resolution, Ray Reconstruction, and Multi-Frame Generation, all of which have to execute within a matter of milliseconds.

Because of what DLSS 4 demands, Nvidia dropped its previous convolutional neural network (CNN) in favor of a vision transformer model. The change brings two major differences. The first is “self-attention,” which lets the model track the importance of each pixel across multiple frames. That self-referential ability should help the model focus on problem areas, such as thin details that shimmer with Super Resolution.

The second is scalability. Transformer models scale more readily, which lets Nvidia give DLSS far more parameters than the previous CNN approach allowed. According to the company, the new transformer model has twice as many parameters.
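For a rough sense of what self-attention means, here is a generic scaled dot-product attention sketch in plain Python. It is a textbook illustration, not Nvidia’s model: it assumes each “token” is a small feature vector standing in for a patch of pixels, and it skips the learned query, key, and value projections a real transformer would use. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Generic scaled dot-product self-attention over a list of feature vectors.

    Assumption: queries, keys, and values are the tokens themselves (no
    learned projections), which keeps the sketch short.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Score this token against every token, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted mix of all tokens.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        )
    return outputs, weights


# Three toy "patches"; the first and third share a feature, the second does not.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed, attention = self_attention(patches)
```

In this toy input, the first patch puts more weight on itself and the third patch than on the second, because they share a feature. That weighting is the sense in which an attention-based model can learn which regions matter most to one another.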

In its demonstration videos, Nvidia claims these changes deliver better stability and detail preservation than the earlier CNN approach. These improvements also aren’t limited to RTX 50-series GPUs. All RTX graphics cards will be able to use the transformer model in DLSS 4 games, at least for the features each generation supports.

I’ve seen DLSS 4 in action a few times, but the real test of the feature will come when Nvidia’s next-generation GPUs launch. Then I’ll be able to evaluate how it performs across a range of games and scenarios to see how it holds up. Regardless, there are significant changes in the feature, and based on what Nvidia has shared so far, those changes are meant to make DLSS better overall.